Homepage for Heads-Up Computing

“Smartphone zombie”

Ever had a love-hate relationship with your smartphone? The mobile interaction paradigm has revolutionized how information can be accessed ubiquitously, allowing us to stay connected anytime and anywhere. However, the current ‘device-centered’ design of smartphones demands constant physical and sensorial user engagement, forcing humans to adapt their bodies to accommodate the use of computing devices. We do so unknowingly by adopting unnatural postures, such as holding up our smartphones with our hands and looking down at our screens. The eyes-and-hands-busy nature of mobile interactions not only constrains how we engage with our everyday activities, but also undermines our ability to be situationally aware (hence, the “smartphone zombie” or “heads-down” phenomenon).

Our vision: Heads-Up Computing

Heads-Up Computing is our ambitious vision to fundamentally change the way we interact with technology by shifting to a more ‘human-centered’ approach. The Metaverse has gained widespread popularity, and we resonate with the vision of creating seamless digital experiences that can enhance daily life, though there are some notable differences between Heads-Up Computing and the metaverse proposed by Meta (formerly Facebook). Our research centers primarily on human-computer interactions that help users manage day-to-day digital information. Heads-Up Computing does not aim to replace physical spaces with virtual reality, but rather to augment and bridge the physical world with virtual spaces via augmented reality. In doing so, we aim to facilitate connections between humans and the digital-physical worlds.

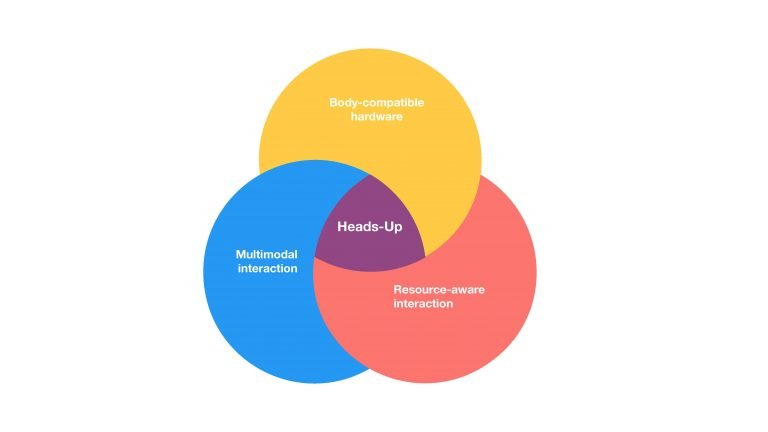

Heads-Up computing aims to provide seamless, just-in-time, and intelligent computing support for humans’ daily activities. The Heads-Up paradigm offers a style of interaction that is compatible with natural human movements, such that users can continue to behave intuitively around their devices. Heads-Up computing places humans at the center stage by focusing on the immediate perceptual space of users (i.e. ego-centric view). Heads-Up Computing is characterized by 3 main components:

1) Body-compatible hardware components. Heads-Up distributes the input and output modules of devices to more naturally match the human input and output channels. Leveraging our two most important sensing/actuating hubs, the head and hands, Heads-Up introduces a quintessential design that comprises a head-piece and a hand-piece. In particular, smart-glasses (Optical See-Through Head-Mounted Displays or OHMDs) and earphones will directly provide visual and audio output to the human eyes and ears. Likewise, a microphone will receive audio input from humans, while a hand-worn device (e.g. a ring or wristband) will be used to receive manual input.

2) Multimodal voice and gesture interaction. Heads-Up utilizes multimodal input/output channels to facilitate multi-tasking scenarios. Voice input and thumb-index-finger gestures are examples of interactions we explore as part of the Heads-Up paradigm.

3) Resource-aware interaction model. This is a software framework that allows the system to understand when to use which human resource. By applying deep learning approaches to audio and visual recordings, for example, the user and environmental status can be inferred. Factors such as whether the user is in a noisy place can influence their ability to absorb information, so it is essential for the system to sense and recognize the user’s immediate perceptual space. Such a model will predict human perceptual space constraints and primary task engagement, then deliver just-in-time information to and from the head- and hand-piece.
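The idea behind the resource-aware interaction model can be illustrated with a minimal sketch. It is not the lab's framework: hand-written rules stand in for the deep-learning inference described above, and every name in it (`UserContext`, `Channel`, `choose_output_channel`), the 70 dB noise threshold, and the preference for the visual channel are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Channel(Enum):
    """Hypothetical output options for the head-piece."""
    VISUAL = "visual (smart-glasses display)"
    AUDIO = "audio (earphones)"
    DEFER = "defer until a resource frees up"


@dataclass
class UserContext:
    """Hypothetical snapshot of the user's immediate perceptual space."""
    ambient_noise_db: float  # estimated ambient noise level
    in_conversation: bool    # the user is currently talking with someone
    eyes_busy: bool          # the primary task demands visual attention


def choose_output_channel(ctx: UserContext, noisy_above_db: float = 70.0) -> Channel:
    """Pick an output channel that the primary task leaves free.

    Rule-based stand-in for a learned model; the threshold and the
    visual-first ordering are illustrative assumptions.
    """
    audio_free = ctx.ambient_noise_db < noisy_above_db and not ctx.in_conversation
    visual_free = not ctx.eyes_busy
    if visual_free:
        return Channel.VISUAL
    if audio_free:
        return Channel.AUDIO
    return Channel.DEFER
```

For example, a user whose eyes are busy in a quiet place would be routed to the audio channel, while the same user in a noisy place would have the information deferred.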

For more details on related studies, please refer to our publication section.

Media

CNA NEWS FEATURE

Prof. Zhao Shengdong shares more about the Heads-Up Computing vision with CNA Insider - “Metaverse: What The Future Of Internet Could Look Like”

Official lab Heads-Up video

Presentation Slides

Our slides, which contain more information on the NUS-HCI Lab and the Heads-Up vision, are available for download here.

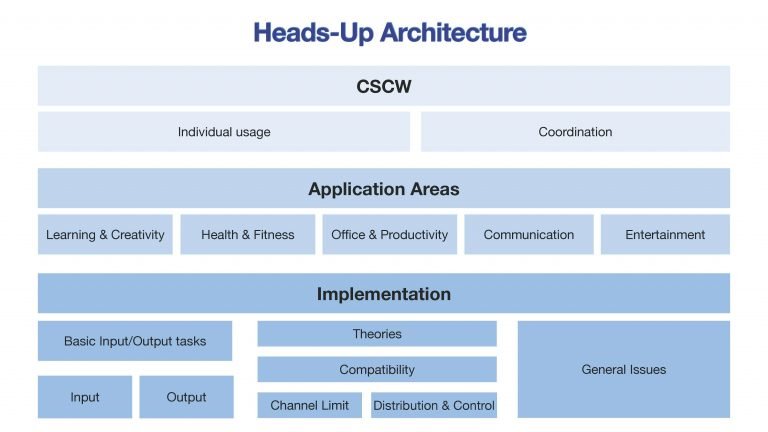

Our Topics

Our Heads-Up research directions include foundational topics as well as their applications. We expect this to evolve and grow over time. Below, we include some examples of current work:

Notifications

Present notifications effectively on OHMD to minimize distractions to primary tasks (e.g. notification display for conversational settings)

Resource interaction model

Establish how different situations affect our ability to take in information on different input channels. Understand which input methods are comfortable and non-intrusive to users’ everyday tasks.

Monitoring Attention

Monitor attention state fluctuations continuously and reliably via EEGs (e.g. during video-learning scenarios)

Education & Learning

Design learning videos on OHMD for on-the-go situations, effectively distributing users’ attention between learning and walking tasks. Support microlearning in mobile scenarios (mobile information acquisition).

Healthcare & Wellness

Facilitate OHMD-based mindfulness practice that is highly accessible, convenient, and easy for novice practitioners to implement in casual everyday settings

Text Presentation

Explore text display design factors for optimal information consumption on OHMDs (e.g. in mobile environments)

Developer/Research tools for Heads-Up applications

Provide testing and development tools to help researchers and developers build Heads-Up applications, streamlining their work process (e.g. learning video-builder)

Past Publications

Recent publications

Given Heads-Up is a new paradigm, how do users input?

- We propose a voice + gesture multimodal interaction paradigm, in which voice input is mainly responsible for entering text, complemented by gesture input from wearable hand controllers (e.g., an interactive ring).

- Voice-based text input and editing. When users are engaged in daily activities, their eyes and hands are often busy; thus we believe voice input is a better modality for inputting text in Heads-Up computing. However, we don’t just input text: editing is a big part of text processing, and editing text using voice alone is known to be a very challenging problem. EYEditor is the solution we have come up with to support mobile voice-based text editing. It uses voice re-dictation to correct text, and a wearable ring mouse to make finer adjustments to the text.

- EYEditor: On-the-Go Heads-up Text Editing Using Voice and Manual Input

- While the paper above is about text input using voice input in general, we also share an application scenario on how to write about one’s experience in an in-situ fashion using voice-based multimedia input - LiveSnippets: Voice-Based Live Authoring of Multimedia Articles about Experiences

- Eyes-free touch-based text input as a complementary input technique. In case voice-based text input is not convenient (e.g. in places where quietness is required), we also have a technique (developed in collaboration with Tsinghua University) that allows you to type text in an eyes-free fashion. Eyes-free here does not mean that there is no visual display, but rather that users do not need to look at the keyboard to input text. This allows the user to maintain a heads-up, hands-down posture while inputting text into a smart-glasses display.

- Blindtype: Eyes-Free Text Entry on Handheld Touchpad by Leveraging Thumb’s Muscle Memory

- Interactive ring as a complementary input technique for command selection and spatial referencing. Voice input has its limitations, as some information is inherently spatial. We also need a device that can perform simple selection as well as 2D or 3D pointing operations. However, the best way for users to perform such operations across a variety of daily scenarios is not known. While different interaction techniques might be optimal for different scenarios, users are unlikely to be willing to carry multiple devices or learn multiple techniques on a daily basis. So what is the best cross-scenario device/technique for command selection and spatial referencing? We conducted a series of experiments to evaluate different alternatives that can perform synergistic interactions under Heads-Up computing scenarios, and we found that an interactive ring stands out as the best cross-scenario input technique for selection and spatial referencing. Refer to the following paper for more details.

- Ubiquitous Interactions for Heads-Up Computing: Users’ Preferences for Subtle Interaction Techniques in Everyday Settings

HOW TO OUTPUT?

The goal of Heads-Up computing is to support users’ current activity with just-in-time, intelligent support, either in the form of digital content or potentially physical help (e.g., using robots). When users are engaged in an existing task, presenting information to them inevitably leads to multi-tasking scenarios. While multitasking with simple information is easier, in some cases the best information support might take the form of dynamic information, so one question that arises is how best to present dynamic information to users in multi-tasking scenarios. After a series of studies, we have come up with a presentation style called LSVP that is more suitable for displaying dynamic information to users. We have also investigated the use of paracentral and near-peripheral vision for information presentation on OHMDs/smart-glasses during in-person social interactions. Read the following papers for more details.

- LSVP: On-the-Go Video Learning Using Optical Head-Mounted Displays

- Paracentral and near-peripheral visualizations: Towards attention-maintaining secondary information presentation on OHMDs during in-person social interactions

Older publications on wearable solutions and multimodal interaction techniques that are foundational to Heads-Up computing:

Tools, Guides and Datasets

- Video Dataset: OHMD-based Studies or Video Learning Experiments

This is the video dataset used in our study “LSVP: Towards Effective On-the-go Video Learning Using Optical Head-Mounted Displays”. The folder contains video materials that can be used to conduct video learning-based experiments on Optical Head-Mounted Displays (OHMDs or smart glasses), or in any within-subject design in general.

- GitHub source code: Progress notifications on OHMD

This source code contains OHMD progress bar notifications (Progress bar types: Circular, Textual, and Linear) used in our study “Paracentral and near-peripheral visualizations: Towards attention-maintaining secondary information presentation on OHMDs during in-person social interactions”. It includes notification triggering implementation (Python) and UI implementation (Unity).

- GitHub source code: Eyeditor

This includes the text editing tool used in our study “EYEditor: Towards On-the-Go Heads-Up Text Editing Using Voice and Manual Input”. It utilizes voice and manual input methods (via a ring mouse).

- GitHub source code: HeadsUp Glass

This is an Android app framework for smart-glasses.

NOTE: You may also refer to the NUS-HCI lab’s overall GitHub repository.

Kindly cite the original studies when using these resources for your research paper.

- Guidance for Controlling Xiaomi’s IoT devices using Python

This is a guide to using Python to control Xiaomi’s IoT devices. Sample code will be released after the related paper is published.

Main Participants

We thank many others who have collaborated with us on our projects in some capacity or other.